On the Diversity and Limits of Human Explanations

Chenhao Tan.

In Proceedings of NAACL 2022.

Abstract:

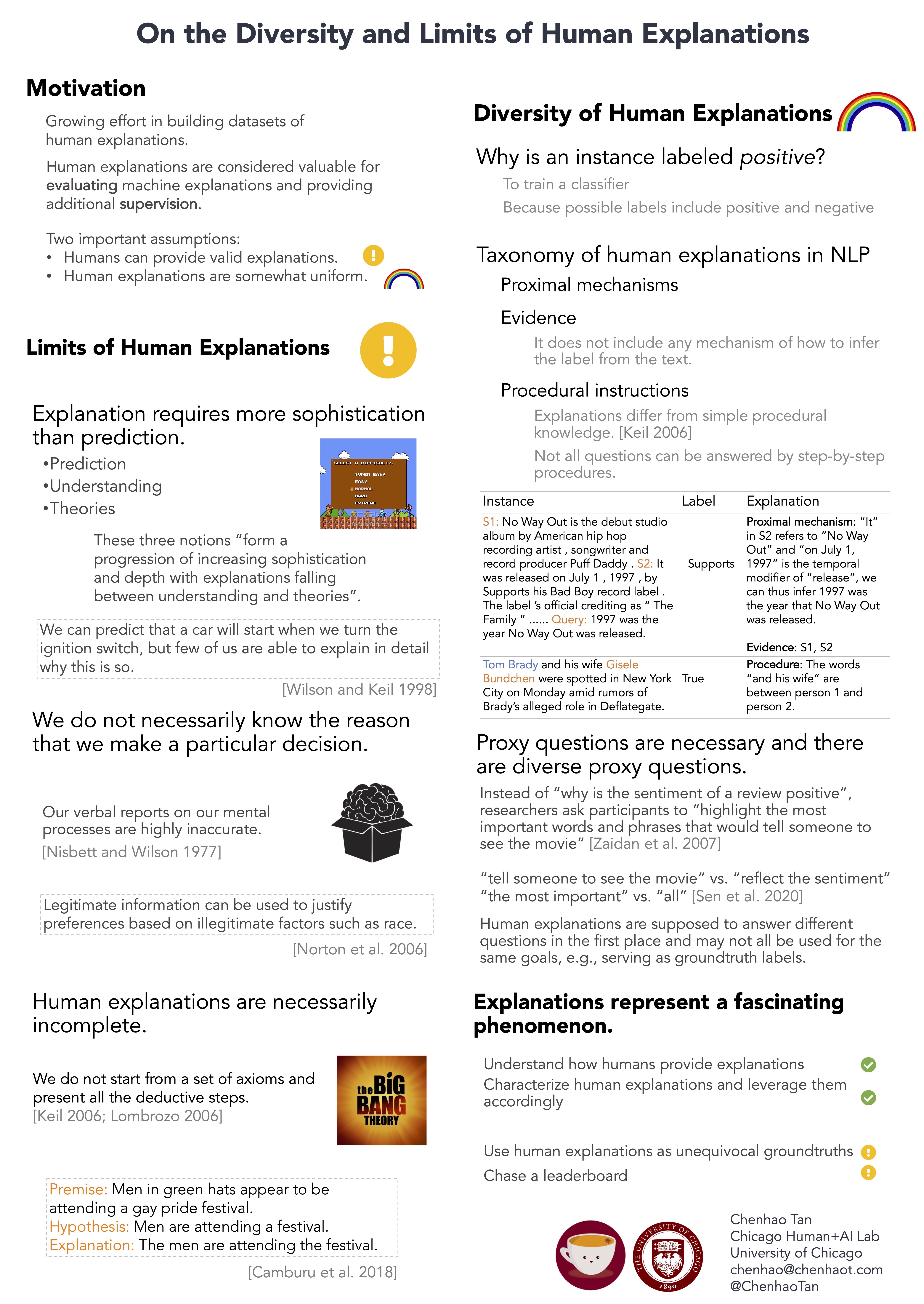

A growing effort in NLP aims to build datasets of human explanations. However, it remains unclear whether these datasets serve their intended goals. This problem is exacerbated by the fact that the term "explanation" is overloaded and refers to a broad range of notions with different properties and ramifications. Our goal is to provide an overview of the diversity of explanations, discuss human limitations in providing explanations, and ultimately draw implications for collecting and using human explanations in NLP.

Inspired by prior work in psychology and cognitive sciences, we group existing human explanations in NLP into three categories: proximal mechanism, evidence, and procedure. These three types differ in nature and have implications for the resultant explanations. For instance, procedure is not considered explanation in psychology and connects with a rich body of work on learning from instructions. The diversity of explanations is further evidenced by proxy questions that are needed for annotators to interpret and answer "why is [input] assigned [label]". Finally, giving explanations may require different, often deeper, understandings than predictions, which casts doubt on whether humans can provide valid explanations in some tasks.

@inproceedings{tan:22,
  author = {Chenhao Tan},
  title = {On the Diversity and Limits of Human Explanations},
  year = {2022},
  booktitle = {Proceedings of NAACL (short papers)}
}