What Gets Echoed? Understanding the 'Pointers' in Explanations of Persuasive Arguments

David Atkinson, Kumar Bhargav Srinivasan, and Chenhao Tan.

In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP'2019).

Abstract:

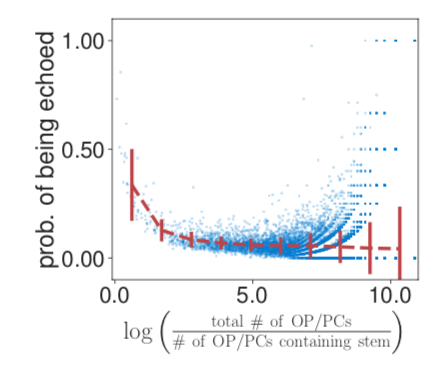

Explanations are central to everyday life, and are a topic of growing interest in the AI community. To investigate the process of providing natural language explanations, we leverage the dynamics of the /r/ChangeMyView subreddit to build a dataset with 36K naturally occurring explanations of why an argument is persuasive. We propose a novel word-level prediction task to investigate how explanations selectively reuse, or echo, information from what is being explained (henceforth, explanandum). We develop features to capture the properties of a word in the explanandum, and show that our proposed features not only have relatively strong predictive power on the echoing of a word in an explanation, but also enhance neural methods of generating explanations. In particular, while the non-contextual properties of a word itself are more valuable for stopwords, the interaction between the constituent parts of an explanandum is crucial in predicting the echoing of content words. We also find intriguing patterns of a word being echoed. For example, although nouns are generally less likely to be echoed, subjects and objects can, depending on their source, be more likely to be echoed in the explanations.
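To make the word-level prediction task concrete, the following is a minimal sketch (not the authors' code) of how echo labels might be derived: each unique word in the explanandum is labeled 1 if it reappears in the explanation and 0 otherwise. The regex tokenizer, the function names `tokenize` and `echo_labels`, and the example sentences are all assumptions made for illustration; the paper's actual preprocessing (for example, stemming or stopword handling) may differ.

```python
import re

def tokenize(text):
    """Lowercase the text and extract word tokens (an assumed, simplistic tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())

def echo_labels(explanandum, explanation):
    """Map each unique explanandum word to 1 if it reappears in the explanation, else 0."""
    explanation_words = set(tokenize(explanation))
    # dict.fromkeys de-duplicates while preserving first-occurrence order.
    unique_words = dict.fromkeys(tokenize(explanandum))
    return {word: int(word in explanation_words) for word in unique_words}

# Hypothetical example texts, not drawn from the dataset.
labels = echo_labels(
    "Taxes fund public schools and roads.",
    "You changed my view because schools depend on taxes.",
)
print(labels)
```

In this toy example, `taxes` and `schools` are echoed while the remaining words are not. A classifier for the task would then predict these binary labels from features of each word and its context.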

[PDF] [Code & Data] [Slides]

@inproceedings{atkinson+srinivasan+tan:19,
  author = {David Atkinson and Kumar Bhargav Srinivasan and Chenhao Tan},
  title = {What Gets Echoed? Understanding the 'Pointers' in Explanations of Persuasive Arguments},
  year = {2019},
  booktitle = {Proceedings of EMNLP}
}