Many Faces of Feature Importance: Comparing Built-in and Post-hoc Feature Importance in Text Classification

Vivian Lai, Jon Z. Cai, and Chenhao Tan.

In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP 2019)

Abstract:

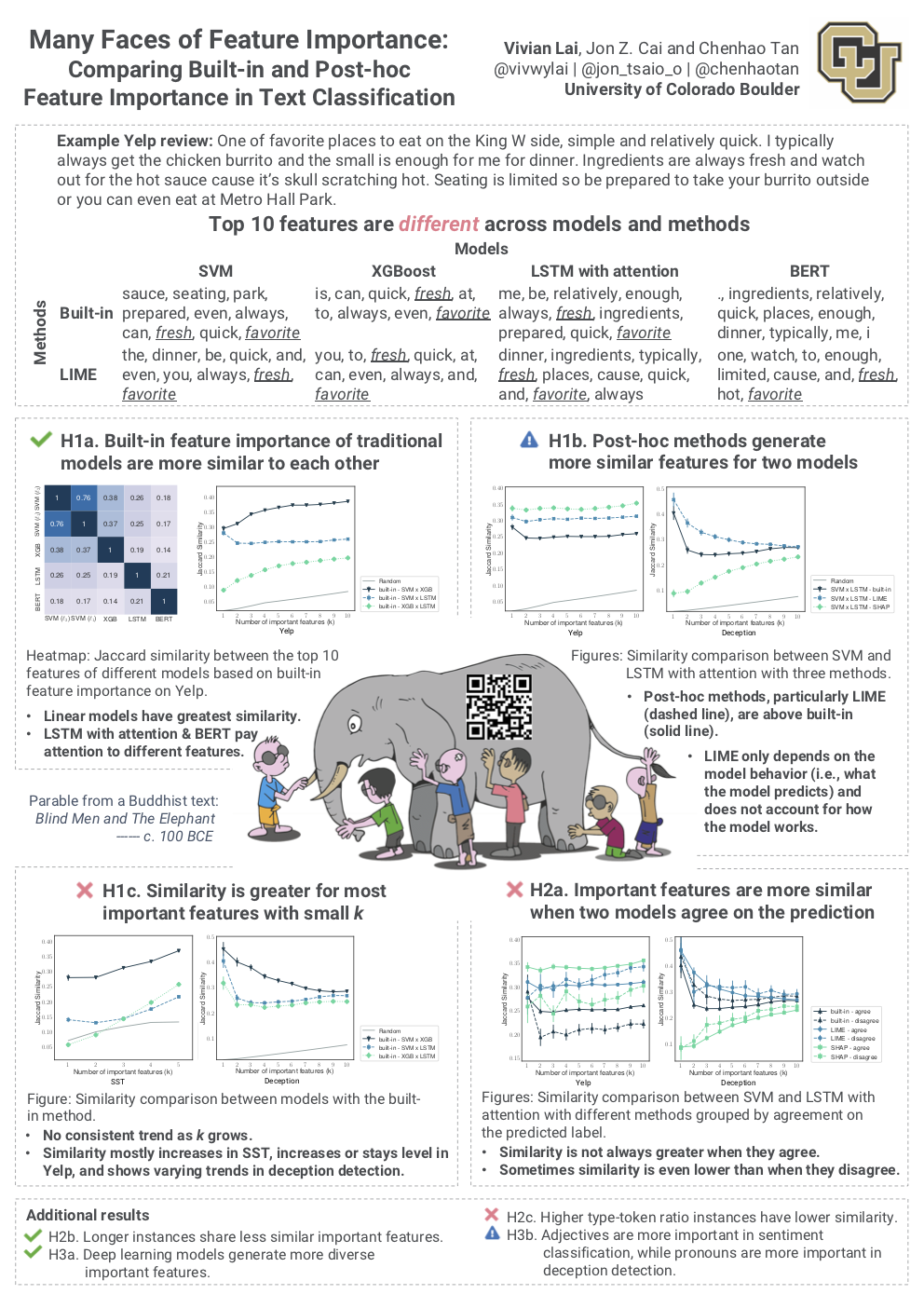

Feature importance is commonly used to explain machine predictions. While feature importance can be derived from a machine learning model with a variety of methods, the consistency of feature importance across different methods remains understudied. In this work, we systematically compare feature importance from built-in mechanisms in a model, such as attention values, and from post-hoc methods that approximate model behavior, such as LIME. Using text classification as a testbed, we find that 1) no matter which method we use, important features from traditional models such as SVM and XGBoost are more similar to each other than to those from deep learning models; and 2) post-hoc methods tend to generate more similar important features for two models than built-in methods do. We further demonstrate how such similarity varies across instances. Notably, important features are not always more similar when two models agree on the predicted label than when they disagree.
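
The abstract does not state how "similarity" between two sets of important features is measured. One common choice, assumed here purely for illustration, is the Jaccard similarity between the top-k features ranked by absolute importance score. The sketch below also averages that score separately over instances where two models agree or disagree on the predicted label, mirroring the comparison in the last sentence of the abstract. The function names (`top_k_features`, `jaccard_at_k`, `mean_similarity_by_agreement`) and the toy scores are hypothetical and are not taken from the paper.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical representation: one explanation maps each token to an importance score
# (e.g. an attention weight or a LIME coefficient).
Explanation = Dict[str, float]


def top_k_features(importance: Explanation, k: int) -> Set[str]:
    """Return the k tokens with the largest absolute importance (assumed ranking rule)."""
    ranked = sorted(importance, key=lambda tok: abs(importance[tok]), reverse=True)
    return set(ranked[:k])


def jaccard_at_k(imp_a: Explanation, imp_b: Explanation, k: int) -> float:
    """Jaccard similarity |A & B| / |A | B| between two top-k feature sets."""
    a, b = top_k_features(imp_a, k), top_k_features(imp_b, k)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def mean_similarity_by_agreement(
    instances: Iterable[Tuple[Explanation, Explanation, int, int]], k: int
) -> Dict[str, float]:
    """Average Jaccard@k over instances where two models agree vs. disagree on the label."""
    buckets = {"agree": [], "disagree": []}
    for imp_a, imp_b, label_a, label_b in instances:
        key = "agree" if label_a == label_b else "disagree"
        buckets[key].append(jaccard_at_k(imp_a, imp_b, k))
    return {key: (sum(vals) / len(vals) if vals else float("nan")) for key, vals in buckets.items()}


# Toy, made-up scores for one review from two hypothetical models.
svm_scores = {"great": 0.9, "boring": -0.7, "plot": 0.1, "the": 0.01}
lstm_scores = {"great": 0.5, "plot": 0.4, "acting": 0.3, "the": 0.2}

print(jaccard_at_k(svm_scores, lstm_scores, k=2))  # → 0.3333333333333333
```

Here the top-2 sets are {great, boring} and {great, plot}, so one shared token out of three distinct tokens gives 1/3. Ranking by absolute value treats strongly negative evidence (such as "boring") as important; a study could instead rank by signed score, which would change the sets being compared.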

@inproceedings{lai+cai+tan:19,
  author = {Vivian Lai and Jon Z. Cai and Chenhao Tan},
  title = {Many Faces of Feature Importance: Comparing Built-in and Post-hoc Feature Importance in Text Classification},
  year = {2019},
  booktitle = {Proceedings of EMNLP}
}